NOTE: The method in this post seems more accurate and effective than the one in the previous post on this blog, "killing a stuck VM from the command line."

Instructions on how to forcibly terminate a VM if it is unresponsive to the VI client

Here you terminate the VM's Master World and User Worlds, which in turn terminates the VM's processes.

1. First list the running VMs to determine the VM ID for the affected VM:

# cat /proc/vmware/vm/*/names

vmid=1076 pid=-1 cfgFile="/vmfs/volumes/50823edc-d9110dd9-8994-9ee0ad055a68/vc using sql/vc using sql.vmx" uuid="50 28 4e 99 3d 2b 8d a0-a4 c0 87 c9 8a 60 d2 31" displayName="vc using sql-192.168.1.10"

vmid=1093 pid=-1 cfgFile="/vmfs/volumes/50823edc-d9110dd9-8994-9ee0ad055a68/esx_template/esx_template.vmx" uuid="50 11 7a fc bd ec 0f f4-cb 30 32 a5 c0 3a 01 09" displayName="esx_template"

For this example, we will terminate the VM with vmid=1093.

2. Next, find the Master World ID. To do this, type:

# less -S /proc/vmware/vm/1093/cpu/status

Widen the terminal or scroll right until you can see the right-most column, which is labelled 'group'. Underneath it you will find: vm.1092.

In this example '1092' is the ID of the Master World.

3. Run this command to terminate the Master World and the VM running in it:

/usr/lib/vmware/bin/vmkload_app -k 9 1092

4. This should kill all the VM's User Worlds and also the VM's processes.

If successful, you will see output similar to:

# /usr/lib/vmware/bin/vmkload_app --kill 9 1070

Warning: Jul 12 07:24:06.303: Sending signal '9' to world 1070.

If the Master World ID is wrong, you may see:

# /usr/lib/vmware/bin/vmkload_app --kill 9 1071

Warning: Jul 12 07:21:05.407: Sending signal '9' to world 1071.

Warning: Jul 12 07:21:05.407: Failed to forward signal 9 to cartel 1071: 0xbad0061

source
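The four steps above can also be scripted. What follows is a minimal POSIX sh sketch, not a VMware tool, and it rests on assumptions: the names-file format shown in step 1, a cpu/status file with a header row, whitespace-separated columns and 'group' (vm.<id>) as its right-most column, and the vmkload_app messages shown in step 4. The function names are hypothetical.

```sh
# Source these functions into a service console shell (ESX 3.x assumed).
VMKLOAD_APP=${VMKLOAD_APP:-/usr/lib/vmware/bin/vmkload_app}

# Step 1: print the vmid of the VM whose displayName is exactly $1.
# Reads the output of `cat /proc/vmware/vm/*/names` on stdin.
find_vmid() {
    awk -v name="$1" '
        index($0, "displayName=\"" name "\"") && match($0, /vmid=[0-9]+/) {
            print substr($0, RSTART + 5, RLENGTH - 5)   # drop the "vmid=" prefix
        }'
}

# Step 2: print the Master World ID from /proc/vmware/vm/<vmid>/cpu/status
# on stdin, i.e. the number after "vm." in the right-most (group) column.
# Assumes the first line is a header; duplicate IDs (one per vCPU) are printed once.
master_world_id() {
    awk 'NR > 1 && $NF ~ /^vm\.[0-9]+$/ {
             id = substr($NF, 4)                  # "vm.1092" -> "1092"
             if (!(id in seen)) { seen[id] = 1; print id }
         }'
}

# Step 4: classify vmkload_app output read on stdin.
check_kill_output() {
    out=$(cat)
    case $out in
        *"Failed to forward signal"*) echo "failed: check the Master World ID"; return 1 ;;
        *"Sending signal"*)           echo "signal sent"; return 0 ;;
        *)                            echo "unrecognised output"; return 2 ;;
    esac
}

# Steps 1-4 together: kill the VM whose displayName is $1.
kill_vm_by_name() {
    vmid=$(cat /proc/vmware/vm/*/names | find_vmid "$1")
    [ -n "$vmid" ] || { echo "no VM named '$1'" >&2; return 1; }
    world=$(master_world_id < "/proc/vmware/vm/$vmid/cpu/status")
    [ -n "$world" ] || { echo "no Master World found for vmid $vmid" >&2; return 1; }
    echo "vmid=$vmid master world=$world"
    "$VMKLOAD_APP" --kill 9 "$world" 2>&1 | check_kill_output
}
```

On the example host above, `kill_vm_by_name esx_template` would look up vmid 1093, read Master World 1092 from /proc/vmware/vm/1093/cpu/status and send it signal 9. It prints the IDs it found so you can sanity-check them; a wrong Master World ID may produce the "Failed to forward signal" message shown above.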

petrichor (/'pe - tri - kor'/) is the familiar scent of rain on dry earth

this tech blog is the wafting fragrance of my geeky outpourings, one post at a time

Showing posts with label esx. Show all posts


Wednesday, March 19, 2008

Tuesday, March 27, 2007

esx: obtaining a vm's ip address from the command line

You can get a VM's IP address using:

vmware-cmd [vmx_path] getguestinfo "ip"

When the guest operating system is running inside a virtual machine, you can pass information from a script (running in another machine) to the guest operating system, and from the guest operating system back to the script, through the VMware Tools service. You do this by using a class of shared variables, commonly referred to as GuestInfo.

VMware Tools must be installed and running in the guest operating system before a GuestInfo variable can be read or written inside the guest operating system. (source: VMware Scripting API - 2.3 User's Manual)

source
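To collect the IP address of every registered VM at once, the getguestinfo call can be looped over the config files listed by `vmware-cmd -l`. This is a minimal sketch, assuming vmware-cmd prints the value as `getguestinfo(ip) = <value>` (check this on your own host) and that VMware Tools is running in each guest; the function names are hypothetical.

```sh
# Command to call; overridable, e.g. for testing without an ESX host.
VMWARE_CMD=${VMWARE_CMD:-vmware-cmd}

# guestinfo_value: keep only the text after "= " in a line such as
# "getguestinfo(ip) = 192.168.1.10" (assumed output format).
guestinfo_value() {
    sed -n 's/^[^=]*= *//p'
}

# list_vm_ips: print "<vmx path><TAB><ip>" for every registered VM,
# or "unknown" when no value comes back (e.g. VMware Tools not running).
list_vm_ips() {
    "$VMWARE_CMD" -l | while IFS= read -r vmx; do
        [ -n "$vmx" ] || continue
        # </dev/null stops the inner command from consuming the loop's input.
        ip=$("$VMWARE_CMD" "$vmx" getguestinfo ip </dev/null 2>/dev/null | guestinfo_value)
        printf '%s\t%s\n' "$vmx" "${ip:-unknown}"
    done
}
```

Run `list_vm_ips` from the Service Console; VMs whose guests do not report an address show up as "unknown" rather than being skipped.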

Monday, March 26, 2007

esx: killing a stuck vm from the command line

esx: stopping a vm from the command line using powerop_mode

Log in to the Service Console and try the following:

vmware-cmd [vm-cfg-path] stop [powerop_mode]

where [vm-cfg-path] is the location of the vmx file for the VM and [powerop_mode] is either hard, soft or trysoft.

It is tempting to just use hard for the [powerop_mode] when it appears that the VM is really stuck :)

source
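A small wrapper can catch typos in [powerop_mode] and show the resulting power state. This is a sketch, assuming `vmware-cmd [vm-cfg-path] getstate` is available on your ESX version to report the state; the function name is hypothetical. It defaults to trysoft, which attempts a soft stop first and falls back to hard only if that fails.

```sh
# Command to call; overridable, e.g. for testing without an ESX host.
VMWARE_CMD=${VMWARE_CMD:-vmware-cmd}

# stop_vm: stop the VM whose .vmx path is $1 with powerop_mode $2
# (hard, soft or trysoft; defaults to trysoft), then print its power state.
stop_vm() {
    cfg=$1
    mode=${2:-trysoft}
    case $mode in
        hard|soft|trysoft) ;;
        *) echo "invalid powerop_mode '$mode': use hard, soft or trysoft" >&2
           return 2 ;;
    esac
    "$VMWARE_CMD" "$cfg" stop "$mode" || echo "stop ($mode) reported an error" >&2
    "$VMWARE_CMD" "$cfg" getstate
}
```

Starting with `stop_vm [vm-cfg-path]` and only reaching for `stop_vm [vm-cfg-path] hard` if the VM is still on keeps the hard power-off as a last resort.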

Wednesday, March 21, 2007

esx: ide vs sata

Installation on IDE or SATA Drives:

(source: vi3_esx_quickstart.pdf)

The installer displays a warning if you attempt to install ESX Server software on an IDE drive or a SATA drive in ATA emulation mode. It is possible to install and boot ESX Server software on an IDE drive. However, VMFS, the filesystem on which virtual machines are stored, is not supported on IDE or SATA. An ESX Server host must have SCSI storage, NAS, or a SAN on which to store virtual machines.


Monday, March 5, 2007

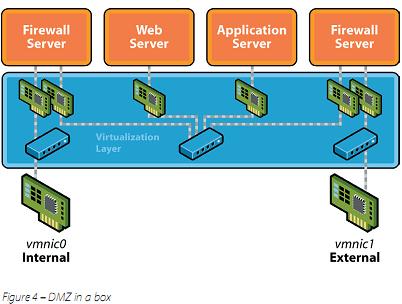

esx: DMZ within a single esx box

DMZ in a box

In this example, four virtual machines run two firewalls, a Web server and an Application Server to create a DMZ. The Web server and Application server sit in the DMZ between the two firewalls. External traffic from the Internet (labeled External) is verified by the firewall running inside a VM and, if authorized, routed to the virtual switch in the DMZ (the switch in the middle). The Web Server and Application Server are connected to this switch and hence can serve external requests.

This switch is also connected to a firewall that sits between the DMZ and the internal corporate network (labeled Internal). This second firewall filters packets and, if they are verified, routes them to VMNIC0, which is connected to the internal corporate network. Hence a complete DMZ can be built inside a single ESX Server. Because of the isolation between the various virtual machines, even if one of them were compromised by, say, a virus, the other virtual machines would be unaffected.

Source1

Source2: tommy walker ppt - Virtualization Reducing Costs, Time and Effort with VMware (2002)
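On the ESX host, the three virtual switches in this layout could be created from the Service Console with esxcfg-vswitch. This is a sketch under assumptions: the vSwitch and port group names are hypothetical, and the physical NICs are passed in as arguments (the description above puts the internal network on VMNIC0; if vmnic0 already uplinks the default vSwitch0, you would use another NIC or that switch). The DMZ switch deliberately gets no uplink, so DMZ traffic can only leave the host through one of the firewall VMs. Each firewall VM then needs one virtual NIC on each of its two port groups, which can be set in the VI Client. Setting DRY_RUN=1 only prints the commands.

```sh
# run: execute a command, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# make_vswitch: create vSwitch $1 with port group $2 and, if $3 is given,
# link physical NIC $3 to it as the uplink.
make_vswitch() {
    run esxcfg-vswitch -a "$1"
    run esxcfg-vswitch -A "$2" "$1"
    if [ -n "$3" ]; then
        run esxcfg-vswitch -L "$3" "$1"
    fi
}

# dmz_in_a_box: $1 = NIC facing the Internet, $2 = NIC facing the corporate LAN.
dmz_in_a_box() {
    make_vswitch vSwitchExt External "$1"   # outside leg of the first firewall VM
    make_vswitch vSwitchDMZ DMZ             # no uplink: stays inside the host
    make_vswitch vSwitchInt Internal "$2"   # inside leg of the second firewall VM
}
```

Running it once with DRY_RUN=1 (for example `DRY_RUN=1; dmz_in_a_box vmnic1 vmnic0`) shows the exact esxcfg-vswitch calls before anything on the host is changed.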

Friday, March 2, 2007

esx: you can't run it on a vm!

Running ESX on a VM - vmware.esx-server | Google Groups:

">On Feb 26, 7:03 pm, "yy" ...@yahoo.com.ph> wrote:
>Has anyone successfully setup/ran an ESX on a Virtual Machine? I need
> to do this as a proof of concept before dealing with real hardware.

ESX server won't run on in a VM virtualized by ESX server. I've tried. There is something ESX looks for in the CPU that is not virtualized by ESX server."


Thursday, February 22, 2007

esx: esx 3.0 feature highlights

- NAS and iSCSI Support

ESX Server 2.x could store virtual machines only on SCSI disks and on Fibre Channel SANs. ESX Server 3.0 can store virtual machines on NAS and iSCSI. iSCSI LUNs, like Fibre Channel LUNs, can be formatted with the VMware file system (VMFS). Each virtual machine resides in a single directory. Network attached storage (NAS) appliances must present file systems over the NFS protocol for ESX Server to be able to use them. NFS mounts are used like VMFS, with ESX Server creating one directory for each virtual machine.

- Four-way Virtual SMP and 16 GB Memory Available to Guest Operating Systems

Virtual machines can now have up to 4 processors (up from 2) and 16 GB of RAM (up from 3.6 GB) allocated to them.

- ESX Server Clusters

VirtualCenter 2.x introduces the notion of a cluster of ESX Server hosts. A cluster is a collection of hosts that can be managed as a single entity. The resources from all the hosts in a cluster are aggregated into a single pool. A cluster looks like a stand-alone host, but it typically has more resources available.

- 64-Bit OS Virtual Machines

64-bit guest operating systems are experimentally supported and visible in the Virtual Infrastructure Client interface, with full support available in future VI3 releases.

- Hot-Add Virtual Disk

ESX Server 3.0 supports adding new virtual disks to a virtual machine while it is running. This is useful with guest operating systems capable of recognizing hot-add hardware.

- VMFS 3

There is a new generation of VMFS in ESX Server 3.0. Scalability, performance, and reliability have all improved. Furthermore, subdirectories are now supported: ESX Server creates a directory for each virtual machine and all its component files.

- Potential Scalability Bottlenecks Have Been Removed

In ESX Server 2.x, one vmx process per running virtual machine ran in the service console to implement certain virtual machine functionality. In ESX Server 3.x, these processes are no longer bound to the service console but instead are distributed across a server's physical CPUs.

- New Guest SDK Available

The VMware Guest SDK allows software running in the guest operating system in a VMware ESX Server 3.0 virtual machine to collect certain data about the state and performance of the virtual machine. Download the Guest SDK package from www.vmware.com/support/developer/

- VMware Tools Auto-Upgrade

VMware Infrastructure 3 (ESX Server 3.0/VirtualCenter 2.0) supports the ability to install or upgrade VMware Tools on multiple virtual machines at the same time without needing to interact with each virtual machine. Detailed instructions are provided in the Installation and Upgrade Guide.

esx: vmfs 2 overview

(source: vmware)

While conventional file systems allow only one server to have read-write access to the same file at a given time, VMFS is a cluster file system that leverages shared storage to allow multiple instances of ESX Server to read and write to the same storage concurrently. VMFS allows:

- Easier VM management: Greatly simplify virtual machine provisioning and administration by efficiently storing the entire virtual machine state in a central location.

- Live Migration of VMs: Support unique virtualization-based capabilities such as live migration of running virtual machines from one physical server to another, automatic restart of a failed virtual machine on a separate physical server, and clustering virtual machines across different physical servers.

- Performance: Get virtual disk performance close to native SCSI for even the most data-intensive applications with dynamic control of virtual storage volumes.

- Concurrent access to storage: Enable multiple installations of ESX Server to read and write from the same storage location concurrently.

- Dynamic ESX Server Modification: Add or delete an ESX Server from a VMware VMFS volume without disrupting other ESX Server hosts.

- VMFS volume resizing on the fly: Create new virtual machines without relying on a storage administrator. Adaptive block sizing and addressing for growing files allows you to increase a VMFS volume on the fly.

- Automatic LUN mapping: Simplify storage management with automatic discovery and mapping of LUNs to a VMware VMFS volume.

- I/O parameter tweaking: Optimize your virtual machine I/O with adjustable volume, disk, file and block sizes.

- Failure recovery: Recover virtual machines faster and more reliably in the event of server failure with distributed journaling.

VMware VMFS cluster file system is included in VMware Infrastructure Enterprise and Standard Editions and is available for local storage only with the Starter edition.

esx: the vmfs file system

VMFS - Wikipedia: "VMFS is VMware's SAN file system. (Other examples of SAN file systems are Global File System, Oracle Cluster File System). VMFS is used solely in the VMware flagship server product, the ESX. It was developed and is used to store virtual machine disk images, including snapshots.

- Multiple servers can read/write the same filesystem simultaneously, while

- Individual virtual machine files are locked

- VMFS volumes can be logically 'grown' (nondestructively increased in size) by spanning multiple VMFS volumes together.

There are three versions of VMFS: VMFS1, VMFS2 and VMFS3.

* VMFS1 was used by ESX 1.x, which is not sold anymore. It didn't feature the cluster filesystem properties and was used only by a single server at a time. VMFS1 is a flat filesystem with no directory structure.

* VMFS2 is used by ESX 2.x, 2.5.x and ESX 3.x. While ESX 3.x can read from VMFS2, it will not mount it for writing. VMFS2 is a flat filesystem with no directory structure.

* VMFS3 is used by ESX 3.x. As a most noticeable feature, it introduced directory structure in the filesystem. Older versions of ESX can't read or write on VMFS3 volumes. Beginning from ESX 3 and VMFS3, also virtual machine configuration files are stored in the VMFS partition by default."
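To check which VMFS version a volume uses, and therefore (per the list above) whether ESX 3.x can write to it, the output of the Service Console's `vmkfstools -P` query can be parsed. A sketch, assuming its first output line has the form `VMFS-3.21 file system spanning 1 partitions.`; the volume name `storage1` and the function names are hypothetical.

```sh
# vmfs_major: print the major VMFS version from `vmkfstools -P` output on stdin,
# assuming a first line such as "VMFS-3.21 file system spanning 1 partitions."
vmfs_major() {
    sed -n '1s/^VMFS-\([0-9][0-9]*\)\..*/\1/p'
}

# esx3_access: what ESX 3.x can do with a volume of major version $1,
# following the version list above (VMFS1 is not covered there).
esx3_access() {
    case $1 in
        3) echo "read-write" ;;
        2) echo "read-only" ;;
        *) echo "unknown" ;;
    esac
}

# Usage on the Service Console (volume name is hypothetical):
#   esx3_access "$(vmkfstools -P /vmfs/volumes/storage1 | vmfs_major)"
```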

